Norman is how you run AI

No Setup. No Queues. No DevOps. Deploy any AI model instantly.

If you use AI, you’ve probably been using ChatGPT for a few years now. Maybe you’ve tried other models like Claude or Grok. Perhaps you’ve even experimented with lesser-known ones, like making a website logo with Janus Pro, structuring JSON with Phi 4, or transcribing audio with Whisper 3. Kudos if you did, but most of us didn’t.

That’s because getting an AI model ready for use can take up to two years. Every year, tens of thousands of machine learning models are created and then forgotten, stuck in GitHub repositories and research papers and never deployed for end users.

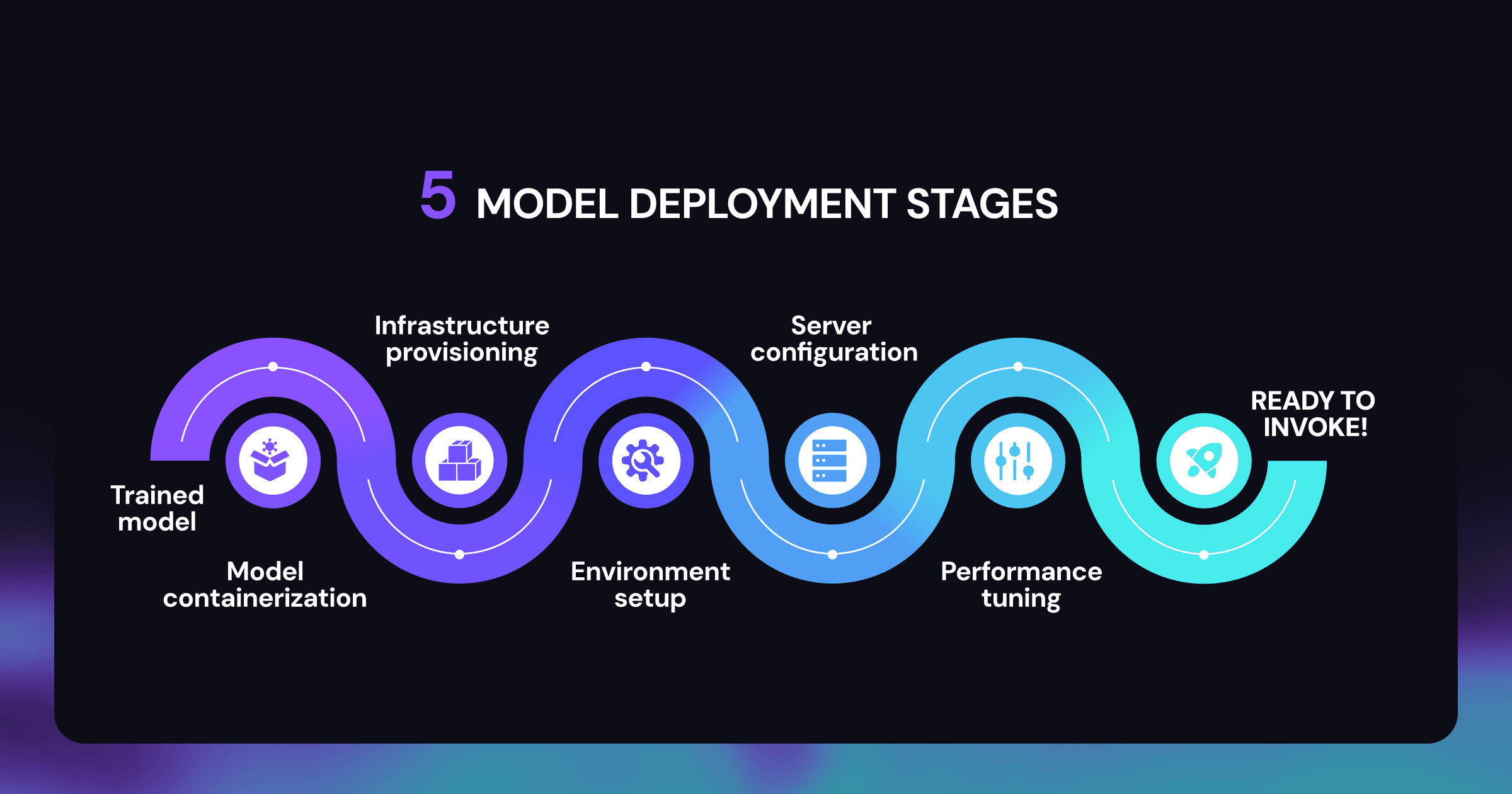

Turning raw models into usable inference endpoints requires expensive hardware, DevOps expertise, and lots of free time that most research and development teams simply do not have. As a result, developers settle for a small set of pre-deployed models, because getting other models to work simply isn’t worth the effort.

We are here to change that. With Norman, you can turn any model into a production-grade endpoint in minutes.

Here’s how we do it:

Zero DevOps Deployment

Upload your model weight file and Norman will automatically deploy it to production in under five minutes.

Swap Models Effortlessly

Use one endpoint for all modalities. Vision, text, and audio all share the same API and SDK.

Fire-and-Forget Inference

Don’t wait around for outputs. Once you submit a request, Norman will notify you when results are ready, so you can run many workflows in parallel.

Unlimited Input & Output

Enjoy complete freedom to work with data of any size, without counting tokens or worrying about duration, dimension, or length limits.

Live Model Catalog

Our catalog isn’t a static archive: every model in it is deployed and available for live inference.
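To make the Fire-and-Forget Inference idea above more concrete, here is a minimal sketch of the submit-then-get-notified pattern using only Python's standard asyncio library. It does not use Norman's SDK: `fake_inference`, `on_ready`, and the model and file names are all hypothetical stand-ins chosen for illustration.

```python
import asyncio


# Hypothetical stand-in for a remote model call; this is NOT Norman's SDK.
async def fake_inference(model: str, payload: str) -> str:
    await asyncio.sleep(0.01)  # simulate model latency
    return f"{model} processed {payload}"


def on_ready(task: asyncio.Task) -> None:
    # Callback that plays the role of the "results are ready" notification.
    print("ready:", task.result())


async def main() -> list[str]:
    # Illustrative jobs only; names are assumptions, not a real catalog.
    jobs = [
        ("whisper", "audio.wav"),
        ("phi-4", "record.txt"),
        ("janus-pro", "logo prompt"),
    ]
    tasks = []
    for model, payload in jobs:
        # Submit without waiting; the request runs in the background.
        task = asyncio.create_task(fake_inference(model, payload))
        task.add_done_callback(on_ready)
        tasks.append(task)
    # The caller is free to do other work here before collecting results.
    return await asyncio.gather(*tasks)


results = asyncio.run(main())
```

The three requests run concurrently, and `asyncio.gather` returns the results in submission order regardless of which one finishes first.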

Why this matters for you:

Developers

Run any model without any setup or DevOps. Swap between models through an identical API and SDK to find out which ones fit you best.

Businesses

Deploy a production-grade endpoint ready for scale in under five minutes, using your own models or any existing model out there.

Researchers

Publish your models with ease and focus on gathering valuable usage data to prove and improve your work.

What’s next?

Over the next few months, we’re expanding Norman in ways that make it easier than ever to use AI and integrate it into your workflows:

Broader SDK support.

We’re extending our SDKs to cover more languages, including JavaScript, Dart, Java, and C#. Whether you’re working on web, mobile, or backend systems, you’ll be able to integrate Norman directly into your code.

Richer data integration.

We’re adding support for more input formats like JSON, DOCX, PDF, and Google Docs, so you can connect your existing inputs and datasets without clunky casting, parsing, or conversion.

More model formats.

You’ll soon be able to deploy models in TensorFlow and ONNX, alongside our existing PyTorch support, making it easier to bring your own models to the platform.

Built-in benchmarking.

Norman will be able to rank model performance against public benchmark datasets, and also against your own custom or proprietary ones, accelerating your analysis process and giving you meaningful insights into how your models perform.

A visual experience.

We’re building a new graphic web client that lets anyone explore models, test inputs, and compare results, no setup required. A simple way to experience AI in action.

Native mobile integration.

Our upcoming mobile client will allow passing inputs straight from your smartphone camera and microphone to any deployed model.

Conversational control.

A language model will soon serve as a gateway to Norman, letting you interact with models, data, and tools through a convenient chat interface.

Cross-model intelligence.

With upcoming MCP integration and mesh connectivity, models across Norman will be able to communicate, learn from each other, and create exponential value in an ecosystem of interconnected AI.

Norman

Write to us

+972547179776
